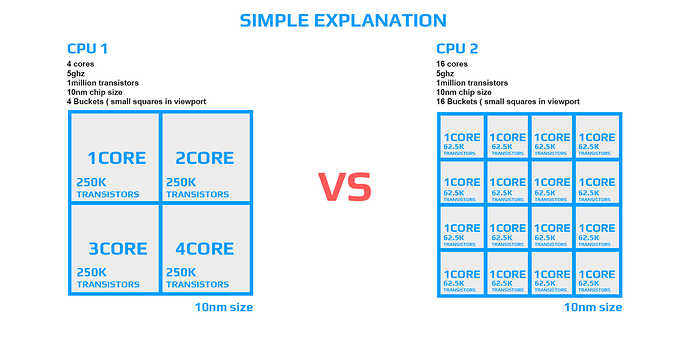

I think my English is so bad that I probably don't always make myself clear; sometimes when I reread my reply, I can't even tell what's going on. About the core count question: I was explaining why you can't compare two otherwise identical CPUs with different core counts, and why a CPU with fewer cores is sometimes faster. My example was the same CPU with the same number of transistors. Two CPUs with the same number of transistors but a different number of cores will have the same SPEED; the only difference is in the preview, where you see more buckets. Whether you have 20 buckets or 2 buckets moving in the viewport doesn't matter, because both CPUs have the same number of transistors, so they can calculate exactly the same amount of information.


Example:

CPU with 20 cores and 1 billion transistors

CPU with 10 cores and 2 billion transistors

The faster one is the 10-core CPU, because it has twice the transistors, and the transistors are what provide the computing (mathematical) power that actually calculates the results. Yes, you will see fewer small squares in the viewport, but that just doesn't matter. How fast those small squares finish depends on how much information they can process.
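Here's a minimal sketch of that arithmetic in Python. It assumes my simplified picture: computing power is directly proportional to the transistor count, and the render splits perfectly evenly across the cores. The function name `render_time` and the work units are made up for illustration.

```python
def render_time(cores: int, transistors_total: float, work: float) -> float:
    """Time to render `work` units, assuming power scales with transistor
    count and every core gets an equal share of the work, in parallel."""
    per_core_power = transistors_total / cores  # each core's share of the transistors
    work_per_core = work / cores                # each core's share of the image
    # The core count cancels out: the result is just work / transistors_total
    return work_per_core / per_core_power


print(render_time(cores=20, transistors_total=1e9, work=1e12))  # 1000.0
print(render_time(cores=10, transistors_total=2e9, work=1e12))  # 500.0
```

Under these assumptions the 10-core CPU finishes in half the time, because only the total transistor budget shows up in the result.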

Sorry, I am not good at Photoshop. But as you can see in the picture, why would you think that more cores with fewer transistors should beat a CPU with fewer cores? Yes, it has fewer cores, but each one has 4X more computing power. This is what I tried to explain in my first post when I mentioned transistors. The rendering speed of these two CPUs is exactly the same.

You can also read this CPU image as an example of bucket size, the small squares in the V-Ray viewport. Yes, you can have 16 small buckets, but if you have only 4 buckets with 4 times more power each, the result will be the same.
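A small Python sketch of the picture's case, assuming each bucket is rendered by its own core at the same time and the frame's work is spread evenly over the buckets. The function name `frame_time` is hypothetical; `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction


def frame_time(buckets: int, power_per_bucket: int, frame_work: int = 1) -> Fraction:
    """Time for one frame when all buckets render in parallel, one per core.
    Assumes the frame's work is divided evenly between the buckets."""
    work_per_bucket = Fraction(frame_work, buckets)
    return work_per_bucket / power_per_bucket


print(frame_time(buckets=16, power_per_bucket=1))  # 1/16
print(frame_time(buckets=4, power_per_bucket=4))   # 1/16
```

Sixteen small buckets with power 1 and four big buckets with power 4 finish the frame at the same moment; only what you see in the viewport while it renders is different.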

In general terms

These days, more cores actually means more transistors, because companies design CPUs that way: they are making much LARGER chips. Look at how big they are; it's the same nanometer process, but the chips are bigger, so they can fit more cores. You can still find some ultra-expensive Intel Xeons with two or even four times more cores than your Intel i9-13900, and this mainstream 13900 still beats those expensive Xeons, even when you put two of them on one motherboard.

I also use V-Ray, but since V-Ray 5 introduced adaptive bucket size, I don't think it makes a difference anymore. If you remember, in older versions you had to set the bucket size to a smaller value so that the end of the render was faster: when the other cores had finished their work, there were no buckets left for them. I wasn't talking about these squares, though; I was trying to explain why core count doesn't matter when it comes to CPU speed. As you mentioned, the V-Ray buckets are a representation of the cores.

More cores only give you a faster preview, since each one calculates a smaller piece. If I remember right, that was the main topic before V-Ray 5.
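To illustrate the old end-of-render problem, here's a toy simulation in Python. It is not V-Ray's actual scheduler; it assumes each core simply grabs the next bucket in order as soon as it is idle, and the bucket costs and the `split` helper are made up for illustration. With four big buckets, one expensive bucket keeps one core busy while the other three sit idle; cutting every bucket into four smaller ones lets the idle cores share the expensive region.

```python
import heapq


def makespan(bucket_costs: list[int], cores: int) -> int:
    """Simulate cores that each take the next bucket in order as soon as they
    are free; return the time at which the last bucket finishes."""
    free_at = [0] * cores  # time at which each core becomes idle
    heapq.heapify(free_at)
    finish = 0
    for cost in bucket_costs:
        start = heapq.heappop(free_at)  # the earliest-idle core takes the bucket
        end = start + cost
        finish = max(finish, end)
        heapq.heappush(free_at, end)
    return finish


def split(bucket_costs: list[int], parts: int) -> list[int]:
    """Hypothetical helper: cut every bucket into `parts` equal smaller buckets
    (costs here are chosen so they divide evenly)."""
    return [cost // parts for cost in bucket_costs for _ in range(parts)]


big = [16, 4, 4, 4]  # one expensive region, three cheap ones
print(makespan(big, cores=4))            # 16
print(makespan(split(big, 4), cores=4))  # 7
```

The total work is 28 units either way, so the best possible time on 4 cores is 7; the big buckets take 16 only because three cores run out of work early.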

I know KeyShot can't use system RAM when you render with the GPU; it was just an example, because some engines do support this. But it's slow, since they have to load everything over the PCIe lanes and the bus. Nobody really uses it that way.

Why are more cores generally better?

You can make them run faster, not just 5 GHz but 6 GHz. Yes, the CPU will run hotter and draw more power, which is the same either way, but with more cores you can control them more effectively and throttle individual cores down. That's one of the many reasons CPUs have more cores: you can throttle individual cores down without losing too much power. If your 4-core CPU gets hot and the system starts throttling one core down, you can lose up to 25 percent of your power. That's why so many cheap laptops are super slow: they overheat too fast, and regulating that heat comes at a big cost because they have fewer cores.
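A quick Python sketch of that percentage, assuming all cores are identical and equally loaded. The function name `share_lost` is hypothetical; `slowdown=1.0` means the throttled core is treated as contributing nothing, and a smaller value models partial throttling.

```python
def share_lost(cores: int, throttled: int = 1, slowdown: float = 1.0) -> float:
    """Fraction of total compute lost when `throttled` cores each lose
    `slowdown` of their speed. Assumes identical, equally loaded cores."""
    return throttled * slowdown / cores


for cores in (4, 16):
    print(f"{cores} cores: {share_lost(cores):.2%} lost")
# 4 cores: 25.00% lost
# 16 cores: 6.25% lost
```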

I don't know if I can make it any clearer, but my point was that the number of cores doesn't really matter in terms of speed. The reason they add more cores is to put in more transistors, so they either increase the size of the chip or move to a smaller nanometer process…

GPU cores and CPU cores are different. A CPU can do much more precise operations, while a GPU can't; there are specific tasks a GPU just can't do, some mathematical things. I don't even know exactly what they are, but you can google it.